Project Checklist

Abstract

This guide provides useful checklists to help you express and prepare your requirements. It also lists the typical organisational and non-technical requirements to consider before contacting the punch team for pricing or for any other architectural or professional advice.

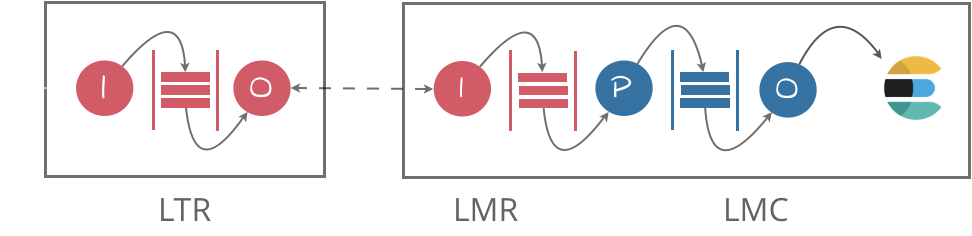

In the following, we refer to the three acronyms depicted in the figure above:

- LTRs are small or medium configurations (1 or 3 servers) in charge of collecting events and forwarding them to a central site.

- LMRs are their receiving counterparts, which receive the events forwarded by the LTRs.

- LMC refers to the central backend system that receives, processes, indexes and stores the events.

Each of these is in fact a punch configuration with more or fewer components and capabilities. It is nonetheless clearer to refer to each variant by name, as each imposes different requirements.

Event Collection

- Identify whether logs are collected in pull or push mode, and make sure that mode is supported by the punch.

- Supported Push Modes

- SNMP

- Netflow (v5/v9)

- UDP

- TCP/TLS

- RELP

- Lumberjack

- All supported Logstash input push plugins

- Supported Pull Modes

- files

- Kafka

- Azure Blob Storage

- All supported Logstash input pull plugins

- Identify the filtering capabilities, if any, required on the LTR side. Filtering is typically used to suppress unimportant logs when the customer equipment cannot be reconfigured upfront to stop sending them.

- Review the required processing resources with the punch team.

- Identify the need for rate limiting, if any.

- Rate limiters can be used to smooth traffic peaks and bursts, and can be activated at various processing points in the LTRs.

- Using rate limiters has, of course, an impact on internal event queuing: events arriving faster than the configured rate accumulate in internal queues until they can be processed.
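The LTR-side filtering and rate limiting described above can be sketched generically: a drop filter followed by a token bucket, the classic rate-limiting technique in which tokens refill at a fixed rate and each forwarded event consumes one. This is a minimal sketch, not the punch implementation; `TokenBucket`, `ltr_stage`, the `severity` field and the debug-drop rule are all hypothetical.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


class TokenBucket:
    """Generic token bucket: `rate` events/s on average, bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full, so an initial burst is accepted
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # over the limit: the event has to wait


def is_unimportant(event: dict) -> bool:
    # Hypothetical drop rule: discard debug-level logs on the LTR.
    return event.get("severity") == "debug"


def ltr_stage(events: Iterable[dict],
              bucket: TokenBucket) -> Iterator[Tuple[dict, bool]]:
    """Drop unimportant events, then tag each kept event as forwarded now (True)
    or held back by the rate limiter (False)."""
    for event in events:
        if is_unimportant(event):
            continue
        yield event, bucket.try_acquire()


# Usage with a frozen clock (no refill): a capacity of 3 lets only 3 events through.
bucket = TokenBucket(rate=100.0, capacity=3, clock=lambda: 0.0)
events = [{"severity": "debug"}] + [{"severity": "info"}] * 5
print([forwarded for _, forwarded in ltr_stage(events, bucket)])
# [True, True, True, False, False]
```

The sketch only tags held-back events; in a real LTR they would wait in an internal queue, which is the queuing impact mentioned above. The bucket capacity bounds the burst accepted at once, while `rate` bounds the sustained throughput.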

Event Shipping

- Make sure all the required network and security equipment and components are identified, together with:

- VPNs/IPSec/TLS certificates

- Protected remote access

- Provide the punch professional team with the following information so that they can check the required network bandwidth:

- Event rate, in events per second (eps)

- Average event size (e.g. 1 KB)

- Ensure that either the LTRs are configured to use the punch load-balancing capabilities, OR the project provides the required load balancers and virtual IP infrastructure.

- Punch load balancing and multi-site/room features are strongly preferred.

- Express the precise expected SLAs in terms of:

- Maximum network failure time: this must be expressed in hours or days. It is used to size the LTR retention capacity, so that LTRs keep buffering events until the network is back.

- Mean end-to-end time from event collection to visualization: this time is indicative. It will be affected by any failure or unexpected traffic peak.

- Log loss: in almost all punch deployments, events must not be lost in case of network, hardware or component failure. However, this does not necessarily apply to all event types (e.g. business or security logs versus monitoring metrics).

- Make sure the punch provides a shipping protocol that meets your requirements.

- Most often, the acknowledged Lumberjack protocol is used, with compression enabled.

- Acknowledgement is required if you need to ensure each event is processed at least once.

- Qualify your networks.

- MUST: use the punch injector test tool to check that the required capacity is actually provided. This tool tests the round-trip capacity of any network connection, using Lumberjack as the target protocol.

- MUST: plan the required monitoring architecture with the punch team.

- LTRs forward system and application metrics to the LMRs/LMCs. This in turn allows the LTRs to be remotely monitored, and alerts to be raised, from the central system.

- Metrics forwarding MUST be identified and a transport protocol chosen (UDP/TCP/Lumberjack are supported).
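The eps and average event size requested above, combined with the maximum network failure time, are enough for a first back-of-the-envelope estimate of both the network bandwidth and the LTR buffering capacity. A minimal sketch, assuming a constant event rate, decimal units (1 KB = 1,000 bytes) and no protocol overhead; the compression ratio is a hypothetical input, not a measured Lumberjack figure, and the function names are illustrative only.

```python
def bandwidth_mbps(eps: float, avg_event_bytes: float,
                   compression_ratio: float = 1.0) -> float:
    """Sustained bandwidth in Mbit/s (1 Mbit = 10**6 bits).

    compression_ratio: e.g. 4.0 for 4:1 compression (hypothetical value;
    measure it on your own logs). 1.0 means no compression.
    """
    return eps * avg_event_bytes * 8 / compression_ratio / 1e6


def ltr_buffer_gb(eps: float, avg_event_bytes: float, outage_hours: float) -> float:
    """LTR disk needed to buffer all events during a network outage, in GB
    (10**9 bytes), assuming events are buffered uncompressed."""
    return eps * avg_event_bytes * outage_hours * 3600 / 1e9


# Hypothetical inputs: 1,000 eps, 1 KB average event size, 24 h maximum outage.
print(bandwidth_mbps(1000, 1000))                         # 8.0
print(bandwidth_mbps(1000, 1000, compression_ratio=4.0))  # 2.0
print(ltr_buffer_gb(1000, 1000, outage_hours=24))         # 86.4
```

Actual traffic is rarely constant, so these figures should be increased to absorb peaks, then reviewed with the punch team.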

Event Processing

- Review the security architecture with the punch team.

- Identify upfront the types of logs to be parsed.

- Check for yourself, and review with the punch team, the availability of the corresponding parsers.

- Make sure that neither you nor your customer(s) expect unrealistic search capabilities.

- Identify cold archiving requirements:

- Retention time (usually 13 months)

- Target storage solution: object storage, storage bay or plain filesystem

- Identify the expected extraction usages:

- Plain extractions only: massive versus filtered

- Log replay for providing Kibana search capabilities on frozen logs

- Identify the functional services expected from the LMC:

- Forensic and investigation

- How many tenants?

- How many roles (RBAC) per tenant?

- Does the customer design dashboards on its own?

- KPIs:

- These usually require additional aggregation processing jobs which, in turn, require processing resources.

- CEP-based alerting

- Complex event processing rules can be inserted in the log stream. They require processing (CPU/memory) resources.

- Machine learning and arbitrary batch or stream processing.

Important

Even if not all features or capabilities are required initially, you should be prepared to possibly add them later. Knowing this upfront is important, as it may require provisioning additional resources (such as Spark nodes), which can in turn influence your architecture.
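The cold archiving retention listed earlier in this section translates directly into storage capacity. A minimal sketch, assuming a constant event rate, decimal units, a hypothetical compression ratio applied before archiving and an optional number of stored copies (which depends on the target storage); `archive_storage_tb` is an illustrative name, not a punch tool.

```python
SECONDS_PER_DAY = 86_400


def archive_storage_tb(eps: float, avg_event_bytes: float, retention_days: float,
                       compression_ratio: float = 1.0, copies: int = 1) -> float:
    """Cold archive capacity in TB (10**12 bytes) for a given retention period.

    compression_ratio: e.g. 5.0 for 5:1 (hypothetical; measure it on your own logs).
    copies: number of stored copies (replication), an assumption that depends on
    the target storage (object storage, storage bay or plain filesystem).
    """
    raw_bytes = eps * avg_event_bytes * SECONDS_PER_DAY * retention_days
    return raw_bytes * copies / compression_ratio / 1e12


# Hypothetical example: 1,000 eps of 1 KB events kept ~13 months (13 x 30 = 390 days).
print(round(archive_storage_tb(1000, 1000, retention_days=390), 2))                         # 33.7
print(round(archive_storage_tb(1000, 1000, retention_days=390, compression_ratio=5.0), 2))  # 6.74
```

Log replay for Kibana searches on frozen logs needs extra, temporary indexing capacity on top of this archive figure.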

Event Forwarding

The punch is often used to forward events to a third-party SIEM such as QRadar.

- Identify rate limiters, if any

- Express the expected format for each type of logs:

- Most often, only raw logs are forwarded. In some cases, the punch performs some format adaptation.

- Express the third-party component expected SLAs:

- Events forwarded to third-party components are usually not acknowledged. It is therefore not possible to guarantee that all events are properly handled.

- It is, however, possible to greatly reduce the probability of this happening.

- Discuss and review these issues with the punch team.

- Is log ordering required? This affects the design of punch channels.

- Log ordering is only important if the next component relies on stateful complex detection rules.
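Why ordering affects channel design can be seen with a generic sketch: once events are spread over parallel workers, a single global order is lost, but routing every event of a given source to the same worker keeps that source's order. This illustrates key-based partitioning in general, under the assumption that downstream rules only need per-source order; it is not the punch channel implementation, and the `source`/`seq` fields and the worker count are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def partition(source: str, workers: int) -> int:
    # Stable hash (CRC-32), so a given source always maps to the same worker;
    # Python's built-in hash() of a str is randomized per process by default.
    return zlib.crc32(source.encode("utf-8")) % workers


def route(events: List[dict], workers: int) -> Dict[int, List[dict]]:
    """Assign events to per-worker queues by source, keeping arrival order in each queue."""
    queues: Dict[int, List[dict]] = defaultdict(list)
    for event in events:
        queues[partition(event["source"], workers)].append(event)
    return dict(queues)


def per_source_sequences(queues: Dict[int, List[dict]]) -> Dict[str, List[int]]:
    """Sequence numbers seen for each source, in the order its worker processes them."""
    seqs: Dict[str, List[int]] = defaultdict(list)
    for queue in queues.values():
        for event in queue:
            seqs[event["source"]].append(event["seq"])
    return dict(seqs)


# Usage: six events from three hypothetical sources, spread over three workers.
sources = ["fw1", "fw2", "fw1", "proxy", "fw2", "fw1"]
events = [{"source": s, "seq": i} for i, s in enumerate(sources)]
print(sorted(per_source_sequences(route(events, workers=3)).items()))
# [('fw1', [0, 2, 5]), ('fw2', [1, 4]), ('proxy', [3])]
```

The trade-off is load balance: a single very verbose source is pinned to one worker, which caps its throughput.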

Platform Build

- Make sure you have a complete and correct design and architecture specification.

- Share it with the punch support team.

- SHOULD: plan to provide punch build training to the project build team.

- MUST: connect the external monitoring system to the punch monitoring API.

- MUST: plan for the punch internal monitoring solution.

- This requires an Elasticsearch cluster, the setup of Kibana dashboards, and (strongly advised) alerting rules in addition to those provided by the external monitoring tool.

- MUST: define and document a validation strategy.

- Use the punch log injectors to stress the platform upfront and effectively measure the expected SLAs.

- Make sure the corresponding injector files are part of the platform configuration and shared with the support team. These files will be used throughout the project lifetime and are a key asset.
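Once an injection run is over, the measured results still have to be compared with the SLAs expressed for event shipping. The following minimal sketch assumes you can export, for every injected event, its injection and indexing timestamps; how to obtain them is deployment-specific and not shown, and `check_sla`, its thresholds and the sample data are hypothetical. It also reports a nearest-rank percentile, because the mean alone hides latency tails.

```python
import math
import statistics
from typing import List, Tuple


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile, with q in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(q / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def check_sla(samples: List[Tuple[float, float]], target_eps: float,
              max_mean_latency_s: float) -> dict:
    """samples: one (injected_at, indexed_at) pair of timestamps, in seconds, per event."""
    latencies = [indexed - injected for injected, indexed in samples]
    # Throughput over the whole run, from the first injection to the last indexing.
    duration = max(ix for _, ix in samples) - min(inj for inj, _ in samples)
    achieved_eps = len(samples) / duration if duration > 0 else float("inf")
    mean_latency = statistics.mean(latencies)
    return {
        "achieved_eps": achieved_eps,
        "mean_latency_s": mean_latency,
        "p99_latency_s": percentile(latencies, 99),
        "eps_ok": achieved_eps >= target_eps,
        "latency_ok": mean_latency <= max_mean_latency_s,
    }


# Toy data, not real measurements: four events with 1 to 1.5 s end-to-end latency.
samples = [(0.0, 1.0), (0.5, 1.5), (1.0, 2.5), (1.5, 3.0)]
result = check_sla(samples, target_eps=1.0, max_mean_latency_s=2.0)
print(result["mean_latency_s"], result["p99_latency_s"])  # 1.25 1.5
```

With so few samples the 99th percentile is simply the slowest event; on a real run with many events it gives a far more useful view of peaks than the mean.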

Platform Operations

- MUST: plan to provide punch maintenance and operations training to the project team.

Project Organization

- MUST: identify all the project team members and provide each of them with an account on the punch support helpdesk. The project PDA MUST be identified.

- MUST: build a complete, representative setup on a punch standalone. This task can be achieved during the punch functional training. It consists of:

- Designing or identifying log injector files for the target event types

- Designing the complete LTRs/LMRs/LMC setup.

- Running the complete solution (on a laptop)

- Sharing the resulting configurations with the punch support team

- SHOULD: all project team members (deploy/run/functional) should have a laptop meeting the following requirements:

- 16 GB RAM, 32 GB preferred.

- Ubuntu 18 or macOS. Native Unix OSes are strongly recommended.

- Punch standalone release installed. It is free.