Syslog Input

The syslog input provides several ways to read data from external applications.

The syslog input reads binary or textual data from a UDP or TCP socket.

Info

Strictly speaking, this input node is more than a syslog input. It can handle both syslog and non-syslog data, and it can read textual and binary data over TCP and UDP. When used with textual data it supports the syslog usage and protocols, hence its name.

When TCP is used, textual data is expected and the newline character (0x0A) is used to delimit lines. With UDP you can choose a so-called codec to handle textual or binary data. Delimiting is not an issue with UDP, since entire records are received from the network layer.

The socket level configuration items are defined in a listen subsection of the node configuration. Here is an example of a TCP server listening on all network interfaces on port 9999:

```yaml
type: syslog_input
settings:
  load_control: none
  rcv_queue_size: 10000
  listen:
    proto: tcp
    host: 0.0.0.0
    port: 9999
    delimiter: end_of_line
```
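To try a configuration like the one above, you can push a few newline-terminated lines to the listening port. The sketch below is a hypothetical Python test client (send_lines is not part of the punch); it only assumes the node listens on TCP port 9999 as configured.

```python
import socket
from typing import Iterable


def send_lines(host: str, port: int, lines: Iterable[str]) -> None:
    """Send each line, terminated by a newline (0x0A), over one TCP connection."""
    payload = "".join(line + "\n" for line in lines).encode("utf-8")
    with socket.create_connection((host, port)) as sock:
        sock.sendall(payload)  # closing the socket afterwards ends the stream


# Example (hypothetical host): send_lines("127.0.0.1", 9999, ["<13>hello", "<13>world"])
```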

Info

Refer to the syslog javadoc documentation.

Textual Data

This section applies to incoming TCP textual data only.

Incoming Line Delimiter

By default, lines are expected to be terminated by either "\n" or "\r\n". You can however select one of three other strategies, or customize the end-of-line delimiters themselves.

Warning

If your punchline declares several syslog_input nodes with different delimiter strategies, you must set single_thread to false.

Custom End-Of-Line Delimiters

You can explicitly set the end-of-line delimiters using the following configuration:

```yaml
type: syslog_input
settings:
  load_control: none
  listen:
    proto: tcp
    host: 0.0.0.0
    port: 9999
    delimiter: end_of_line
    delimiters:
      - "\n"
      - "\r\n"
      - "\0"
```
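The following Python sketch illustrates how a receiver can cut a TCP byte stream into lines when several end-of-line delimiters are configured. It is not the syslog input implementation: the "shortest frame wins" rule mirrors the documented behaviour of Netty's DelimiterBasedFrameDecoder, and the optional max_length check loosely mirrors the listen.max_line_length setting; both are assumptions about how the node behaves. split_frames is a hypothetical name.

```python
from typing import List, Optional, Tuple


def split_frames(buffer: bytes, delimiters: List[bytes],
                 max_length: Optional[int] = None) -> Tuple[List[bytes], bytes]:
    """Split `buffer` into complete frames and return (frames, unconsumed remainder)."""
    frames: List[bytes] = []
    while True:
        # When several delimiters match, keep the one producing the shortest
        # frame, so that b"abc\r\n" yields b"abc" rather than b"abc\r".
        best_index, best_delim = -1, b""
        for delim in delimiters:
            index = buffer.find(delim)
            if index != -1 and (best_index == -1 or index < best_index):
                best_index, best_delim = index, delim
        if best_index == -1:
            # No delimiter yet: keep the partial line for the next TCP read,
            # unless it already exceeds the maximum frame size.
            if max_length is not None and len(buffer) > max_length:
                raise ValueError(f"frame length {len(buffer)} exceeds {max_length}")
            return frames, buffer
        frame = buffer[:best_index]
        if max_length is not None and len(frame) > max_length:
            raise ValueError(f"frame length {len(frame)} exceeds {max_length}")
        frames.append(frame)
        buffer = buffer[best_index + len(best_delim):]
```

In a receive loop, the returned remainder is prepended to the next chunk read from the socket, so a line split across two TCP segments is still emitted whole.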

Octet Counting

To use the RFC 6587 octet-counting strategy, use the following settings:

```yaml
type: syslog_input
settings:
  load_control: none
  listen:
    proto: tcp
    host: 0.0.0.0
    port: 9999
    delimiter: octet_counting
```
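With octet counting, each frame is prefixed by its length in bytes followed by a space ("MSG-LEN SP SYSLOG-MSG" in RFC 6587). A minimal decoding sketch in Python, using a hypothetical decode_octet_counted helper, could look as follows; incomplete frames stay in the remainder until more bytes arrive.

```python
from typing import List, Tuple


def decode_octet_counted(buffer: bytes) -> Tuple[List[bytes], bytes]:
    """Decode octet-counted frames; return complete messages and the remainder."""
    frames: List[bytes] = []
    while buffer:
        space = buffer.find(b" ")
        if space == -1:
            break  # length prefix not fully received yet
        prefix = buffer[:space]
        # MSG-LEN is a non-zero decimal number without leading zeros
        if not prefix.isdigit() or prefix.startswith(b"0"):
            raise ValueError(f"invalid octet count prefix: {prefix!r}")
        end = space + 1 + int(prefix)
        if len(buffer) < end:
            break  # message body not fully received yet
        frames.append(buffer[space + 1:end])
        buffer = buffer[end:]
    return frames, buffer
```

Because the length is explicit, the message body may itself contain newline characters: decode_octet_counted(b"3 a\nb") returns ([b"a\nb"], b"").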

Auto Detection Mode

Some equipment sends a mix of octet-counting and end-of-line frames over the same TCP connection. This is rare, but it may happen. The syslog input node supports this behavior, which is not clearly forbidden by the various syslog RFCs. Use the following configuration:

```yaml
type: syslog_input
settings:
  load_control: none
  listen:
    proto: tcp
    host: 0.0.0.0
    port: 9999
    delimiter: auto_detect
    delimiters:
      - "\n"
      - "\r\n"
      - "\0"
```
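How the node tells both framings apart is not detailed here. A common heuristic, assumed in the Python sketch below, is that an octet-counted frame starts with a digit while a syslog message starts with "<PRI>". The helper names are hypothetical and only illustrate the idea.

```python
from typing import List, Optional, Tuple

Split = Optional[Tuple[bytes, bytes]]  # (frame, remainder), or None if incomplete


def _octet_counted(buffer: bytes) -> Split:
    space = buffer.find(b" ")
    if space == -1:
        return None
    prefix = buffer[:space]
    if not prefix.isdigit():
        raise ValueError(f"invalid octet count prefix: {prefix!r}")
    end = space + 1 + int(prefix)
    if len(buffer) < end:
        return None
    return buffer[space + 1:end], buffer[end:]


def _end_of_line(buffer: bytes, delimiters: List[bytes]) -> Split:
    best: Optional[Tuple[int, bytes]] = None
    for delim in delimiters:
        index = buffer.find(delim)
        # prefer the delimiter that yields the shortest frame ("\r\n" over "\n")
        if index != -1 and (best is None or index < best[0]):
            best = (index, delim)
    if best is None:
        return None
    index, delim = best
    return buffer[:index], buffer[index + len(delim):]


def auto_detect_frames(buffer: bytes, delimiters: List[bytes]) -> Tuple[List[bytes], bytes]:
    """Decode a buffer mixing octet-counted and end-of-line frames."""
    frames: List[bytes] = []
    while buffer:
        # Assumed heuristic: a leading digit means an octet-counted frame.
        if buffer[:1].isdigit():
            result = _octet_counted(buffer)
        else:
            result = _end_of_line(buffer, delimiters)
        if result is None:
            break  # wait for more data
        frame, buffer = result
        frames.append(frame)
    return frames, buffer
```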

Plain Tcp Frames

To disable end-of-line delimiting and simply read TCP frames as they are received, use the following settings:

```yaml
type: syslog_input
settings:
  load_control: none
  listen:
    proto: tcp
    host: 0.0.0.0
    port: 9999
    delimiter: none
```

Warning

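You will typically need a stateful punchlet to decode the frames correctly, since TCP does not preserve message boundaries: one log may be split across several frames, or several logs may arrive in a single frame. Make sure you understand the load-balancing implications, as a stateful punchlet must receive all the frames of a given connection.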

Binary Data

This mode is only supported with UDP (unicast or multicast) data. Set the codec property to either "string" (the default value) or "bytes" to indicate what you want to receive. For example:

```yaml
- type: syslog_input
  settings:
    listen:
      proto: udp
      host: 0.0.0.0
      port: 9902
      codec: bytes
  publish:
    - stream: logs
      fields:
        - log
```

The codec property here is part of the listen dictionary. It is not the same property as the multiline codec, which is part of the settings dictionary.
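With UDP, each datagram is delivered as one record, so binary payloads need no delimiter. To check a bytes codec configuration, a hypothetical Python sender such as the one below can push a raw datagram to the configured port (9902 in the example above).

```python
import socket


def send_datagram(payload: bytes, host: str = "127.0.0.1", port: int = 9902) -> None:
    """Send one UDP datagram; the receiver gets it as one complete record."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


# Example: send_datagram(bytes([0x00, 0x01, 0xFF]) + b"binary")
```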

Streams And fields

The syslog input receives log lines. Each line is emitted in the topology as a tuple field. Here is how you name the corresponding stream and field:

```yaml
- type: syslog_input
  settings:
    listen:
      proto: tcp
      host: 0.0.0.0
      port: 9902
  publish:
    - stream: logs
      fields:
        - log
```

The syslog input can be configured to emit additional information to keep track of the sender and receiver addresses, as well as a unique identifier and a timestamp. You add these fields by specifying the reserved punch fields:

```yaml
- type: syslog_input
  settings:
    listen:
      proto: tcp
      host: 0.0.0.0
      port: 9902
  publish:
    - stream: logs
      fields:
        # the field that will hold the received data. This is not a reserved field.
        - log
        # the local listening ip address
        - _ppf_local_host
        # the local listening port
        - _ppf_local_port
        # the peer source ip address
        - _ppf_remote_host
        # the peer source port
        - _ppf_remote_port
        # the receiving time
        - _ppf_timestamp
        # a unique identifier
        - _ppf_id
    - stream: _ppf_metrics
      fields:
        - _ppf_latency
```

You can add none, some or all of these additional fields. Reserved streams and fields are documented in the javadoc.

Latency Tracker Tuple

Besides emitting the received data, you can configure the syslog input to periodically generate and emit a so-called punch latency tracker tuple. That tuple traverses your channel and keeps track of the traversal time at each node it goes through. Here is how you configure the syslog input to emit such a record every 30 seconds:

```yaml
- type: syslog_input
  settings:
    listen:
      proto: tcp
      host: 0.0.0.0
      port: 9902
    self_monitoring.activation: true
    self_monitoring.period: 30
  publish:
    - stream: logs
      fields:
        - log
    - stream: _ppf_metrics
      fields:
        - _ppf_latency
```

Note that you must declare a published stream using the reserved stream and field names _ppf_metrics and _ppf_latency.

Refer to the monitoring guide to learn how to use the resulting latency measurements to monitor your stormlines.

Multiline

The syslog input supports multi-line reading, in which case it aggregates subsequent log lines. A subsequent line is detected using either a regex or a starts-with prefix. Aggregating multiple lines may require waiting for the last ones, hence you must set a timeout so that what has been received is emitted if the sender keeps its TCP socket open but does not send a subsequent line for too long.

You can also set a delimiter string if your downstream processing requires a special tag to separate the aggregated lines.

```yaml
type: syslog_input
settings:
  listen:
    proto: tcp
    host: 0.0.0.0
    port: 9902
  codec: multiline
  multiline.startswith: "\t"
  multiline.delimiter: " "
  multiline.timeout: 1000
```

Warning

The aggregated line still contains the text matched by the regex or the starts-with prefix; it is not removed. Should you need to remove it, use a downstream punchlet.
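The aggregation logic described above can be sketched as follows. This Python class is a hypothetical illustration, assuming that lines beginning with the multiline.startswith prefix are continuation lines appended to the current record; the clock is injectable so that the timeout can be tested. As the warning states, the prefix is kept in the aggregated output.

```python
import time
from typing import Callable, List, Optional


class MultilineAggregator:
    """Hypothetical sketch of startswith-based multiline aggregation."""

    def __init__(self, startswith: str, delimiter: str = "\n", timeout_ms: int = 1000,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.startswith = startswith
        self.delimiter = delimiter
        self.timeout_s = timeout_ms / 1000.0
        self.clock = clock
        self.parts: List[str] = []
        self.last_seen = 0.0

    def feed(self, line: str) -> Optional[str]:
        """Add a line; return the previous record if this line starts a new one."""
        completed = None
        if self.parts and not line.startswith(self.startswith):
            completed = self._flush()
        self.parts.append(line)  # the prefix is kept, not stripped
        self.last_seen = self.clock()
        return completed

    def poll(self) -> Optional[str]:
        """Emit the pending record if no line arrived within the timeout."""
        if self.parts and self.clock() - self.last_seen >= self.timeout_s:
            return self._flush()
        return None

    def _flush(self) -> str:
        record = self.delimiter.join(self.parts)
        self.parts = []
        return record
```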

Optional Parameters

- load_control (String, default "none")

  When enabled, the syslog input limits its rate to a specified value. You can use this to limit the rate of data received from, or sent to, external systems. Check the related properties.

- load_control.rate (Long, default 10000)

  Only relevant if load_control is set to limit the rate. The rate is limited to this number of messages per second.

- load_control.adaptative (Boolean, default false)

  If true, the load control does not strictly limit the traffic to load_control.rate messages per second, but lets it go lower or higher as long as the topology is not overloaded. Overload situations are detected by monitoring the Storm tuple traversal time. If that traversal TODO

- rcv_queue_size (Integer, default 10000)

  Each configured listening address has its own running thread, which reads lines and stores them in a queue that is in turn consumed by the syslog input executor(s). This parameter sets the size of that queue. If the queue is full, the socket is no longer read, which in turn slows down the sender. Use it to give more or less capacity to absorb bursts without impacting the senders.

- listen.max_line_length (Integer, default 1048576)

  The maximum frame size in TCP mode. If incoming data exceeds this amount without an end-of-line delimiter (\n) being received, an exception may occur. It is reported in the topology worker log file, for example:

  ```
  2019-07-01 17:22:34.707 c.t.s.c.p.c.n.i.NettyServerDataHandler [INFO] message="socket level exception" cause=frame length (over 1052924) exceeds the allowed maximum (1048576)
  2019-07-01 17:22:34.708 STDIO [ERROR] io.netty.handler.codec.TooLongFrameException: frame length (over 1052924) exceeds the allowed maximum (1048576)
  2019-07-01 17:22:34.709 STDIO [ERROR] at io.netty.handler.codec.LineBasedFrameDecoder.fail(LineBasedFrameDecoder.java:146)
  ```

  This setting must be provided in the listen section of the syslog input settings.

- single_thread (Boolean, default true)

  Defines whether one or several threads manage the syslog_input node. There is no need to set it to false unless you plan to use different delimiter strategies in the same punchline.
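The reader thread and queue described for rcv_queue_size behave like a bounded producer/consumer queue. The Python sketch below uses hypothetical names and is not the node's code; an iterable of lines stands in for socket reads. It shows the back-pressure effect: put() blocks while the queue is full, so the reader stops consuming its socket until the executor catches up.

```python
import queue
import threading
import time
from typing import Iterable, List

_END = object()  # sentinel: the sender closed its connection


def socket_reader(lines: Iterable[str], buffer: queue.Queue) -> None:
    """Stand-in for the per-listener thread; `lines` simulates socket reads."""
    for line in lines:
        # put() blocks while the queue is full: the thread stops reading its
        # socket, and TCP flow control eventually slows the sender down.
        buffer.put(line)
    buffer.put(_END)


def executor(buffer: queue.Queue, delay_s: float = 0.0) -> List[str]:
    """Stand-in for the syslog input executor consuming the queue."""
    received: List[str] = []
    while True:
        item = buffer.get()
        if item is _END:
            return received
        received.append(item)
        time.sleep(delay_s)  # simulate slow downstream processing


def run_listener(lines: Iterable[str], rcv_queue_size: int, delay_s: float = 0.0) -> List[str]:
    buffer: queue.Queue = queue.Queue(maxsize=rcv_queue_size)
    reader = threading.Thread(target=socket_reader, args=(lines, buffer))
    reader.start()
    received = executor(buffer, delay_s)
    reader.join()
    return received
```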

The codec is an optional parameter that can take the following values:

- json_array: the input is streamed continuously and split into JSON array elements. Each element is emitted as a separate tuple.

- xml: the input is streamed continuously and split into top-level XML elements. Each element is emitted as a separate tuple.

- multiline: several lines are grouped together according to a multiline pattern. This is typically used to read Java stack traces and emit each one in a single tuple field.

  Use multiline.regex or multiline.startswith to configure how new lines are detected.
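The json_array idea, emitting each element of a possibly large JSON array as soon as it is complete, can be sketched in Python with json.JSONDecoder.raw_decode. This is an illustration under assumptions, not the node's parser: it tolerates some malformed input (stray commas, for instance) and waits whenever an element, including a trailing number such as "12" that could become "123", may still be incomplete.

```python
import json
from typing import Iterable, Iterator

_WHITESPACE = " \t\r\n"


def stream_json_array(chunks: Iterable[str]) -> Iterator[object]:
    """Yield each top-level element of a JSON array as soon as it is complete."""
    decoder = json.JSONDecoder()
    buffer, pos, opened = "", 0, False
    for chunk in chunks:
        buffer = buffer[pos:] + chunk
        pos = 0
        while True:
            # skip whitespace and separators between elements
            while pos < len(buffer) and buffer[pos] in _WHITESPACE + ",":
                pos += 1
            if pos >= len(buffer):
                break
            if not opened:
                if buffer[pos] != "[":
                    raise ValueError("input is not a JSON array")
                opened, pos = True, pos + 1
                continue
            if buffer[pos] == "]":
                return
            try:
                value, end = decoder.raw_decode(buffer, pos)
            except json.JSONDecodeError:
                break  # element incomplete: wait for the next chunk
            # only accept the element once something follows it
            rest = end
            while rest < len(buffer) and buffer[rest] in _WHITESPACE:
                rest += 1
            if rest >= len(buffer):
                break
            yield value
            pos = end
```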

Specific parameters for the multiline codec:

- multiline.regex (String)

  Sets the regex used to determine whether a log line is an initial or a subsequent line. You can also set a specific delimiter and a timeout.

- multiline.startswith (String)

  Sets the prefix used to determine whether a log line is an initial or a subsequent line. You can also set a specific delimiter and a timeout.

- multiline.delimiter (String, default "\n")

  Once complete, the aggregated log line is made of the sequence of received lines. You can insert a line delimiter between them to make further parsing easier.

- multiline.timeout (Long, default 1000)

  If no subsequent line is received, the pending line is forwarded downstream after this timeout, expressed in milliseconds.

Metrics

SSL/TLS

To learn more about encryption possibilities, refer to the dedicated SSL/TLS configuration section.

Socket Configuration

The listen section can take the following parameters:

```yaml
listen:
  # the listening protocol. Can be "tcp" or "udp"
  proto: tcp
  # the listening host address. Use "0.0.0.0" to listen on all interfaces
  host: 0.0.0.0
  # the listening port number
  port: 9999
  # Enable compression. If true, clients must be configured with compression
  # enabled as well
  compression: false
  # Enable SSL.
  # Not mandatory, false by default
  ssl: true
  # Select your encryption provider, "OPENSSL" or "JDK"
  ssl_provider: JDK
  # Key and certificates.
  # Mandatory if ssl is enabled.
  ssl_private_key: /opt/keys/punchplatform.key.pkcs8
  ssl_certificate: /opt/keys/punchplatform.crt
  ssl_trusted_certificate: /opt/keys/cachain.pem
  # Close inbound connections if the client sends no data.
  # Use 0 to never time out.
  # Not mandatory: 0 by default
  read_timeout_ms: 30000
  # Only for udp
  # Not mandatory: false by default
  # If true, use a NioDatagramChannel with IPv4, else the default constructor (IPv6)
  ipv4: true
  # Only for udp
  # Not mandatory: 2048 by default
  # If defined, sets the max size of a udp packet
  max_line_length: 2048
  # Possible values are "end_of_line", "none", "octet_counting" or "auto_detect"
  delimiter: end_of_line
  # Only relevant with "delimiter" set to "end_of_line" or "auto_detect"
  delimiters: [ "\n", "\r\n" ]
  # Only for udp
  # Not mandatory: 2048000 by default.
  # Size (in bytes) of the socket level UDP reception buffers.
  # Should more data arrive before the syslog input reads it, you will
  # experience data loss. This is, of course, the way UDP works.
  # This value cannot exceed the maximum value set at OS level
  # (sysctl net.core.rmem_max)
  recv_buffer_size: 350000
```
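recv_buffer_size corresponds conceptually to the socket-level SO_RCVBUF option. The Python sketch below (open_udp_socket is a hypothetical helper) requests a receive buffer and reads back the size actually granted. On Linux, the kernel silently caps the request at net.core.rmem_max and reports back twice the value it applied, to account for bookkeeping overhead.

```python
import socket


def open_udp_socket(host: str, port: int, recv_buffer_size: int) -> socket.socket:
    """Open a UDP socket with an enlarged kernel receive buffer (SO_RCVBUF)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # Set before traffic arrives; the OS may cap the requested size.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, recv_buffer_size)
    sock.bind((host, port))
    return sock


if __name__ == "__main__":
    udp = open_udp_socket("127.0.0.1", 0, 350000)
    print("effective SO_RCVBUF:", udp.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF))
    udp.close()
```

If the effective value is much lower than requested, raise net.core.rmem_max at OS level first.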

Info

- udp: each line is received as a UDP packet, hence the line length is limited to 64K. Multiline is not supported over UDP.
- tcp: TCP sockets can be configured with SSL/TLS support and/or socket-level compression.

UDP Multicast

UDP multicast is supported. Here is an example configuration:

```yaml
listen:
  # multicast only works for udp
  proto: udp
  # Valid multicast ranges are 224.0.0.0 to 239.255.255.255
  host: 230.0.0.0
  # the listening port number
  port: 9999
  # A network interface is required for multicast
  interface: eth1
  # choose between binary and string
  codec: binary
  # Only for udp
  # Not mandatory: 2048 by default
  # If defined, sets the max size of a udp packet
  max_line_length: 2048
  # Only for udp
  # Not mandatory: 2048000 by default.
  # Size (in bytes) of the socket level UDP reception buffers.
  # Should more data arrive before the syslog input reads it, you will
  # experience data loss. This is, of course, the way UDP works.
  # This value cannot exceed the maximum value set at OS level
  # (sysctl net.core.rmem_max)
  recv_buffer_size: 350000
```
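Joining a multicast group requires telling the kernel which local interface to use. In Python's standard socket API, the IP_ADD_MEMBERSHIP option takes an ip_mreq structure holding the group address and the interface IPv4 address, not an interface name such as eth1. The sketch below is a hypothetical receiver; 192.168.1.10 stands in for the address of eth1.

```python
import ipaddress
import socket
import struct


def membership_request(group: str, interface_ip: str) -> bytes:
    """Pack an ip_mreq structure: multicast group address + local interface address."""
    if not ipaddress.ip_address(group).is_multicast:
        raise ValueError(f"{group} is outside the 224.0.0.0/4 multicast range")
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface_ip))


def join_multicast(group: str, port: int, interface_ip: str,
                   recv_buffer_size: int = 2048000) -> socket.socket:
    """Open a UDP socket bound to `port` and subscribed to `group` on one interface."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, recv_buffer_size)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    membership_request(group, interface_ip))
    return sock


# Usage (hypothetical address of eth1):
# sock = join_multicast("230.0.0.0", 9999, "192.168.1.10")
# data, sender = sock.recvfrom(2048)
```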

Monitoring

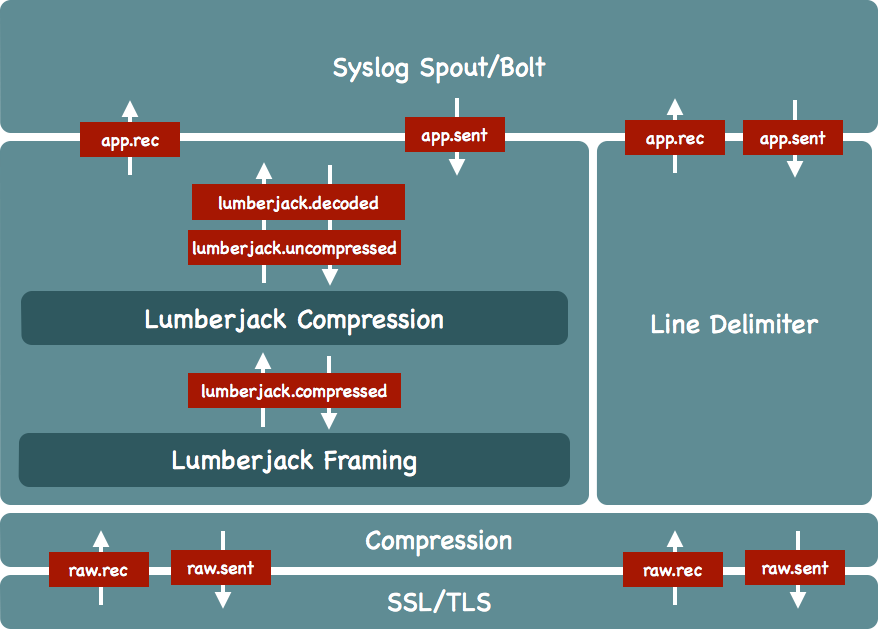

The following picture summarizes the configurable protocol stack you can plug in by configuration. The important reported metrics are also highlighted.