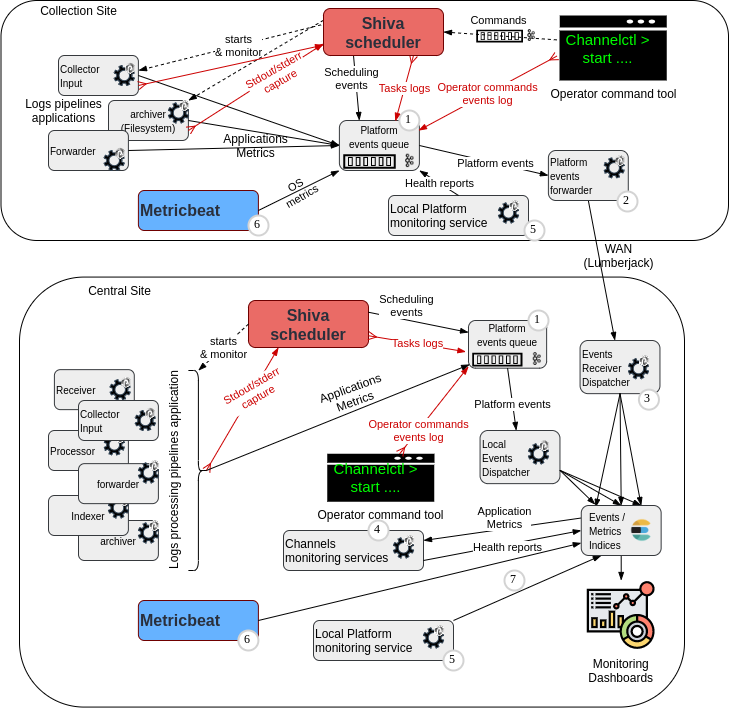

Log Collector: Platform Events forwarder punchline (2)

Note

Click on this link to go to the Central Log Management platform monitoring events overview.

Key design highlights

JSON encoding

Warning

For compatibility with 'beats' inputs (at least metricbeat), messages in the platform-events Kafka topic are encoded in JSON, not in Lumberjack format.

Please refer to the reference punchline example for the appropriate configuration of the Lumberjack input node.
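To make the encoding concrete, here is a minimal Python sketch of what a consumer of such a topic has to do: the record value is a bare JSON string, so it is decoded directly, with no envelope to unwrap first. The sample document and its field names are hypothetical and only loosely shaped like a beats document; they are not taken from a real platform.

```python
import json

# Hypothetical record value: a bare JSON document, as a string.
# The field names are illustrative assumptions, not a real metricbeat schema.
raw_value = (
    '{"@timestamp": "2020-07-21T10:00:00Z", "type": "metricbeat", '
    '"system": {"cpu": {"total": {"pct": 0.42}}}}'
)


def decode_platform_event(value: str) -> dict:
    """Decode a Kafka record value that holds a plain JSON document."""
    event = json.loads(value)
    if not isinstance(event, dict):
        raise ValueError("expected a JSON object at the top level")
    return event


event = decode_platform_event(raw_value)
print(event["type"], event["system"]["cpu"]["total"]["pct"])
```

In the punchline itself, this is why the Kafka input reads the value as a string and publishes it as a single field.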

Transport / interface / HA

Platform events are forwarded using the Lumberjack protocol, as for other cybersecurity logs or events that we do not want to lose. For example, operator actions must not be lost, for two reasons:

- because they are part of the audit trail of the operator actions.

- because they are used to trigger the channels monitoring service on the central site (i.e. an application is monitored only if the last known operator action for this application is a 'start').
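The second reason can be illustrated with a short Python sketch. It assumes, for illustration only, that operator actions arrive as chronological `(application, action)` pairs; the function name and the application names are hypothetical and do not reflect the actual monitoring service.

```python
from typing import Dict, Iterable, Set, Tuple


def monitored_applications(actions: Iterable[Tuple[str, str]]) -> Set[str]:
    """Return the applications whose last known operator action is 'start'.

    `actions` must be in chronological order.
    """
    last_action: Dict[str, str] = {}
    for application, action in actions:
        last_action[application] = action  # later actions override earlier ones
    return {app for app, action in last_action.items() if action == "start"}


history = [("parsing", "start"), ("archiving", "start"), ("archiving", "stop")]
print(sorted(monitored_applications(history)))       # ['parsing']

# If the final 'stop' event is lost in transit, 'archiving' is still
# considered started and would be monitored although it is stopped.
print(sorted(monitored_applications(history[:-1])))  # ['archiving', 'parsing']
```

A single lost event is enough to make the central site monitor a stopped application, or ignore a running one.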

Because this protocol is acknowledged, data that the remote central site fails to handle is replayed later and forwarded again.
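The replay behaviour can be sketched in Python as follows. This is a simplified model under stated assumptions, not the actual node implementation: `send` is a hypothetical callable returning `True` when the remote site acknowledges an event, and the committed position stands in for a Kafka consumer offset.

```python
from typing import Callable, List


def forward_with_replay(
    events: List[str], send: Callable[[str], bool], max_attempts: int = 5
) -> int:
    """Forward events in order, advancing only after an acknowledgement.

    Returns the number of acknowledged events.
    """
    committed = 0  # index of the next event to forward
    attempts = 0   # consecutive failures for the current event
    while committed < len(events) and attempts < max_attempts:
        if send(events[committed]):
            committed += 1  # acknowledged: move on to the next event
            attempts = 0
        else:
            attempts += 1   # not acknowledged: the same event is sent again
    return committed


received: List[str] = []
calls = {"count": 0}


def flaky_send(event: str) -> bool:
    """Hypothetical receiver that rejects every other call."""
    calls["count"] += 1
    if calls["count"] % 2 == 1:
        return False
    received.append(event)
    return True


acked = forward_with_replay(["e1", "e2", "e3"], flaky_send)
print(acked, received)  # 3 ['e1', 'e2', 'e3']
```

Every event is eventually delivered despite the failures, because the position never advances past an unacknowledged event.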

The lumberjack_output node can target multiple servers on the central site. This provides load balancing and high availability for the forwarding mechanism.
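As an illustration of how several destinations give both load balancing and high availability, here is a minimal Python sketch using round-robin selection with failover. The selection strategy is an assumption made for the example; the source does not state which strategy the node actually uses. `make_sender` and `deliver` are hypothetical names.

```python
from itertools import cycle
from typing import Callable, List, Optional, Set


def make_sender(
    destinations: List[str], deliver: Callable[[str, str], bool]
) -> Callable[[str], Optional[str]]:
    """Build a send function that rotates over destinations with failover."""
    rotation = cycle(destinations)

    def send(event: str) -> Optional[str]:
        # Try each destination at most once, starting with the next in rotation.
        for _ in range(len(destinations)):
            destination = next(rotation)
            if deliver(destination, event):
                return destination
        return None  # no destination accepted the event; the caller must retry

    return send


down: Set[str] = set()


def deliver(destination: str, event: str) -> bool:
    """Hypothetical transport: succeeds unless the destination is marked down."""
    return destination not in down


send = make_sender(["centralfront1:1711", "centralfront2:1711"], deliver)
first = send("e1")   # centralfront1:1711
second = send("e2")  # centralfront2:1711
down.add("centralfront1:1711")
third = send("e3")   # centralfront2:1711, after centralfront1 fails
print(first, second, third)
```

While both servers are up, traffic alternates between them; when one goes down, events still reach the remaining server.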

Reference configuration example

Monitoring - Events forwarder punchline

    version: "6.0"
    runtime: shiva
    type: punchline
    # The purpose of this punchline is to send various types of platform events to different indices
    # so that they have different retention rules.
    # This is important because all platform events (platform metrics, operator commands audit events, health monitoring status...)
    # are coming through a single events flow (especially when coming from remote platforms) .
    # This is a reference configuration item for DAVE 6.0 release - checked 21/07/2020 by CVF
    dag:
      - type: kafka_input
        settings:
          topic: platform-monitoring
          # This topic receives metric or events from the platform components, but directly stored in
          # a json string (therefore the value_codec setting) in the kafka document (no Lumberjack envelope,
          # contrary to what is usual in other kafka topics of the punchplatform.
          # This is the standard way for beats to write to kafka (among which, the metricbeat we are
          # using to collect
          value_codec:
            type: string
          # Each time we restart/crash, we continue from last checkpoint to avoid dropping tuples
          start_offset_strategy: last_committed
          # In case no checkpoint is remembered for this topic and consumer group, then start from the top of the Kafka topic
          auto.offset.reset: earliest
          fail_action: sleep
          fail_sleep_ms: 50
        publish:
          - stream: docs
            fields:
              - doc
      - type: lumberjack_output
        settings:
          destination:
            - host: centralfront1
              port: 1711
              compression: true
              ssl: true
              ssl_client_private_key: "@{PUNCHPLATFORM_SECRETS_DIR}/server.pem"
              ssl_certificate: "@{PUNCHPLATFORM_SECRETS_DIR}/server.crt"
              ssl_trusted_certificate: "@{PUNCHPLATFORM_SECRETS_DIR}/fullchain-central.crt"
            - host: centralfront2
              port: 1711
              compression: true
              ssl: true
              ssl_client_private_key: "@{PUNCHPLATFORM_SECRETS_DIR}/server.pem"
              ssl_certificate: "@{PUNCHPLATFORM_SECRETS_DIR}/server.crt"
              ssl_trusted_certificate: "@{PUNCHPLATFORM_SECRETS_DIR}/fullchain-central.crt"
        subscribe:
          - component: kafka_input
            stream: docs
    metrics:
      reporters:
        - type: kafka
          reporting_interval: 60
    settings:
      topology.max.spout.pending: 6000