Lumberjack Input¶

The Lumberjack input node is similar to the TCP Syslog input node, but instead of bytes, lumberjack frames are the base unit.

A lumberjack frame is a binary-encoded set of key-value pairs. It makes it possible to send map structures over a TCP socket and to benefit from per-frame acknowledgement.
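To make the idea of a binary-encoded set of key-value pairs concrete, here is a minimal Python sketch. It assumes the publicly documented Lumberjack v1 wire layout (a version byte, a frame type byte, then big-endian 32-bit lengths); the exact layout used by the punch nodes is not stated on this page and may differ. The function names are hypothetical and only serve the illustration.

```python
from __future__ import annotations

import struct


def encode_data_frame(sequence: int, fields: dict[str, str]) -> bytes:
    """Encode one data frame: '1' 'D' <seq> <pair count> then <len><key><len><value> pairs.

    Assumption: Lumberjack v1 layout, all integers are big-endian unsigned 32-bit.
    """
    parts = [b"1D", struct.pack(">II", sequence, len(fields))]
    for key, value in fields.items():
        raw_key, raw_value = key.encode("utf-8"), value.encode("utf-8")
        parts.append(struct.pack(">I", len(raw_key)) + raw_key)
        parts.append(struct.pack(">I", len(raw_value)) + raw_value)
    return b"".join(parts)


def decode_data_frame(frame: bytes) -> tuple[int, dict[str, str]]:
    """Decode a frame produced by encode_data_frame back into (sequence, fields)."""
    if frame[:2] != b"1D":
        raise ValueError("not a v1 data frame")
    sequence, count = struct.unpack_from(">II", frame, 2)
    offset = 10  # 2 header bytes + two 4-byte integers
    fields: dict[str, str] = {}
    for _ in range(count):
        pair = []
        for _ in range(2):  # read the key, then the value
            (length,) = struct.unpack_from(">I", frame, offset)
            offset += 4
            pair.append(frame[offset:offset + length].decode("utf-8"))
            offset += length
        fields[pair[0]] = pair[1]
    return sequence, fields


def encode_ack(sequence: int) -> bytes:
    """Encode the acknowledgement of a sequence number: '1' 'A' <seq>."""
    return b"1A" + struct.pack(">I", sequence)
```

A receiver would decode each data frame, hand the resulting map to the rest of the pipeline, and send back an acknowledgement carrying the sequence number once the data is safely processed.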

Here is a complete configuration example. The [listen] section is identical to that of the Syslog input. The lumberjack input accepts additional options, in particular keep-alive options that periodically send keep-alive messages to the clients to check that the connection is still alive.

Two options are supported to react to dead peer sockets: the read_timeout_ms property or the keep_alive properties.

Here is an example using the read socket timeout.

{

"type": "lumberjack_input",

"settings": {

"rcv_queue_size": 10000,

"listen": {

"host": "0.0.0.0",

"port": 9999,

"compression": true,

"ssl": true,

"ssl_provider": "JDK",

"ssl_private_key": "/opt/keys/punchplatform.key.pkcs8",

"ssl_certificate": "/opt/keys/punchplatform.crt",

"ssl_trusted_certificate": "/opt/keys/ca.pem",

# If no data is received for 30 seconds, then perform the read_timeout_action

"read_timeout_ms": 30000,

# In case a zombie client is detected, close the socket.

# An alternative "exit" action makes the whole JVM exit, so as to restart the topology altogether

"read_timeout_action": "close",

# You can also decide what to do should a tuple failure occur. The default

# strategy ("close") consists in closing the peer socket. The peer client will reconnect

# and resume sending its traffic. This is overall the best strategy because it is very unlikely

# to suffer from just one data item failure. In most cases you have lots of failures because of a

# problem writing the data to a next destination (kafka, elasticsearch, etc.). Closing the socket

# and causing a socket reconnection is in the end as robust as just sending the fail message for

# one particular data item.

# It is just as good to configure the action as "exit". In that case, the entire JVM will

# exit then be restarted. It gives more time for an overloaded system to recover.

"fail_action": "close",

# If the node receives a window frame then it will acknowledge only the window.

# Else the node will acknowledge all lumberjack frames.

# By default "auto" but it can be fixed to "window" or "frame".

"ack_control": "auto"

}

}

}
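The ack_control comments in the configuration above can be read as a small decision rule. The following Python sketch is one interpretation of that prose, not the node's actual implementation: frames are modelled as hypothetical ("W", window_size) and ("D", sequence) tuples, and it is an assumption that in window mode the node acknowledges the last sequence number once a full window has been received.

```python
def sequences_to_ack(frames, ack_control="auto"):
    """Return the sequence numbers that would be acknowledged, in order.

    frames: iterable of ("W", window_size) or ("D", sequence) tuples.
    ack_control: "auto", "window" or "frame", as in the configuration.
    """
    acks = []
    window_size = None       # size announced by the last window frame, if any
    received_in_window = 0   # data frames seen since the window started

    for kind, value in frames:
        if kind == "W":
            window_size = value
            received_in_window = 0
            continue

        # "auto" switches to window acknowledgement once a window frame was seen.
        use_window = ack_control == "window" or (
            ack_control == "auto" and window_size is not None
        )
        if not use_window:
            acks.append(value)  # acknowledge every data frame
            continue

        received_in_window += 1
        if window_size is not None and received_in_window == window_size:
            acks.append(value)  # acknowledge only the end of the window
            received_in_window = 0
    return acks
```

With a window of 3 followed by sequences 1, 2 and 3, this sketch acknowledges only sequence 3 in "auto" or "window" mode, and all three sequences in "frame" mode.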

Alternatively, you can use a keep-alive mechanism as follows:

{

"type": "lumberjack_input",

"settings": {

"rcv_queue_size": 10000,

"listen": [

{

"host": "0.0.0.0",

# send keep alive message every 30 seconds

"keep_alive_interval": 30,

# if the corresponding ack is not received after 20 seconds, close the socket

"keep_alive_timeout": 20,

...

}

]

}

}

Warning

Support for keep-alives sent from the punch lumberjack output node to lumberjack input nodes is only implemented starting at version Avishai v3.3.5. Do not use it if your LTRs are running an older version.

SSL/TLS¶

To learn more about encryption possibilities, refer to the dedicated SSL/TLS configuration section.

Streams and fields¶

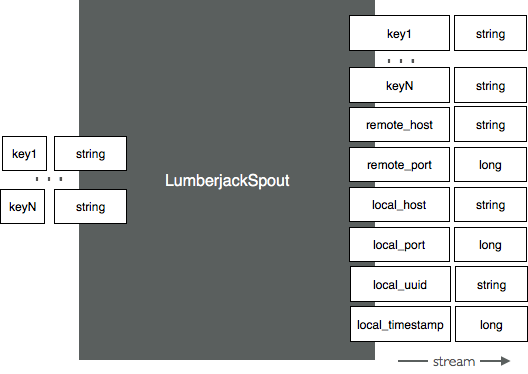

The Lumberjack input node emits in the topology a tuple with the fields received in the Lumberjack frame plus 6 optional fields. The optional fields are used to vehiculate (respectively) the remote (local) socket IP address, and the remote (local) socket port number, a unique id and a timestamp. This is summarized by the next illustration:

You are free to select any of the 6 optional reserved fields:

- _ppf_timestamp : the number of milliseconds since 1/1/1970, timestamp of entry of the document in PunchPlatform

- _ppf_id : a unique id identifying the document, allocated at entry in PunchPlatform

- _ppf_remote_host : the address of the sender of the document to the PunchPlatform

- _ppf_remote_port : the tcp/udp port of the sender

- _ppf_local_host : the address of the punchplatform receiver of the document

- _ppf_local_port : the tcp/udp port on which the document was received by PunchPlatform

Normally, these fields are first generated at the punch entry point (in a SyslogInput for example). Therefore, if these fields are present in the incoming Lumberjack message, their values are preserved and emitted in the output fields with the same names. This is activated only if the corresponding publish stream is defined.

If these fields are not present in the incoming Lumberjack message, then the lumberjack input node will generate default values for these fields, assuming that this node is the actual punch entry point.
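The preserve-or-generate rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the shape of the socket information and the use of a random UUID for _ppf_id are hypothetical, since this page does not describe how the node builds its default values.

```python
import time
import uuid


def emit_fields(frame_fields, socket_info, published):
    """Build the emitted tuple for the published field names.

    frame_fields: key/value pairs received in the Lumberjack frame.
    socket_info:  _ppf_remote_host, _ppf_remote_port, _ppf_local_host and
                  _ppf_local_port as seen on the accepted socket.
    published:    field names listed in the publish section.
    """
    # Defaults used when this node is the actual punch entry point.
    defaults = {
        "_ppf_timestamp": int(time.time() * 1000),  # milliseconds since 1/1/1970
        "_ppf_id": str(uuid.uuid4()),               # assumption: any unique id
        **socket_info,
    }
    emitted = {}
    for name in published:
        # A value already present in the frame always wins over the default.
        emitted[name] = frame_fields.get(name, defaults.get(name))
    return emitted
```

A frame that already carries _ppf_id keeps it, while a missing _ppf_timestamp is filled with the reception time. Fields that are not listed in the publish section are not emitted at all.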

Note

For compatibility with BRAD LTRs, the input node will take care of translating BRAD-style 'standard' tuple fields into CRAIG reserved fields :

- local_timestamp ==> _ppf_timestamp

- local_uuid ==> _ppf_id

- local_port ==> _ppf_local_port

- local_host ==> _ppf_local_host

- remote_port ==> _ppf_remote_port

- remote_host ==> _ppf_remote_host

Therefore, if a tuple is received with the log unique id in 'local_uuid' field, but '_ppf_id' field is published to storm stream by the LumberjackSpout storm_settings, then the output value will be the one received in local_uuid.
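The translation table in this note maps directly onto a dictionary lookup. The sketch below is a hedged illustration: the function name is hypothetical, and it is an assumption that a reserved field already present in the frame takes precedence over its BRAD-style equivalent, and that the legacy name is dropped after translation.

```python
# Mapping taken from the list above: BRAD-style name -> reserved field name.
LEGACY_TO_RESERVED = {
    "local_timestamp": "_ppf_timestamp",
    "local_uuid": "_ppf_id",
    "local_port": "_ppf_local_port",
    "local_host": "_ppf_local_host",
    "remote_port": "_ppf_remote_port",
    "remote_host": "_ppf_remote_host",
}


def translate_legacy_fields(fields):
    """Return a copy of fields with BRAD-style names replaced by reserved names."""
    # Start with everything that does not need translating.
    translated = {k: v for k, v in fields.items() if k not in LEGACY_TO_RESERVED}
    for legacy, reserved in LEGACY_TO_RESERVED.items():
        if legacy in fields:
            # Assumption: an explicit reserved field is not overwritten.
            translated.setdefault(reserved, fields[legacy])
    return translated
```

For example, a frame carrying local_uuid and log yields a tuple with _ppf_id and log, which matches the behaviour described for the published '_ppf_id' field.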

You must also explicitly name the lumberjack fields you wish to map to Storm tuple fields. Here is an example to process data from a Logstash forwarder or from a PunchPlatform LTR.

{

"type": "lumberjack_input",

"settings": {

...

},

"component": "lumberjack_input",

"publish": [

{

"stream": "logs",

"fields": [

"log",

"_ppf_remote_host",

"_ppf_remote_port",

"_ppf_local_host",

"_ppf_local_port",

"_ppf_id",

"_ppf_timestamp"

]

}

]

}

Optional Parameters¶

- load_control : String. Selects the load control mode. In rate-limiting mode, the node limits the rate to a specified value. You can use this to limit the incoming or outgoing rate of data from/to external systems. Check the related properties.

- load_control.rate : String. Only relevant when load_control selects rate limiting. Limits the rate to this number of messages per second.

- rcv_queue_size : Integer : 10000. One thread runs for each configured listening address. It reads incoming frames and stores them in a queue, which is in turn consumed by the input executor(s). This parameter sets the size of that queue. If the queue is full, the socket is no longer read, which in turn slows down the sender. Use this to give more or less capacity to absorb bursts without impacting the senders.
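The effect of rcv_queue_size can be illustrated with a bounded queue. This Python sketch only mimics the principle with the standard library; the helper name is hypothetical and the real node relies on its own reader threads and socket handling.

```python
import queue


def offer(rcv_queue, frame):
    """Try to enqueue a frame; return False when the queue is full.

    A reader thread that gets False would stop reading its socket, so the
    TCP receive buffer fills up and the sender is slowed down.
    """
    try:
        rcv_queue.put_nowait(frame)
        return True
    except queue.Full:
        return False


# A tiny queue (size 2) stands in for rcv_queue_size.
rcv_queue = queue.Queue(maxsize=2)
results = [offer(rcv_queue, f) for f in ("f1", "f2", "f3")]

# Once a consumer takes one item, the reader can enqueue again.
rcv_queue.get_nowait()
results.append(offer(rcv_queue, "f3"))
print(results)  # [True, True, False, True]
```

A larger queue absorbs longer bursts before the reader stops, at the cost of holding more unprocessed data in memory.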

Compression¶

The lumberjack node supports two compression modes. If you use the compression parameter, compression is performed at the socket level using Netty ZLib compression. If you instead use the lumberjack_compression parameter, compression is performed as part of the Lumberjack frames.

Tip

Netty compression is the most efficient, but works only if the peer is a punch Lumberjack node. If you send your data to a standard Lumberjack server such as a Logstash daemon, use lumberjack compression instead.
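To illustrate what compression "as part of Lumberjack frames" can look like, here is a minimal Python sketch. It assumes the publicly documented Lumberjack v1 compressed frame ('1', 'C', a big-endian 32-bit payload length, then a zlib-compressed payload holding other frames). Whether lumberjack_compression produces exactly this layout is an assumption, and the function names are hypothetical.

```python
import struct
import zlib


def wrap_compressed(frames: bytes, level: int = 6) -> bytes:
    """Wrap already-encoded frames into one compressed frame: '1' 'C' <len> <zlib data>."""
    payload = zlib.compress(frames, level)
    return b"1C" + struct.pack(">I", len(payload)) + payload


def unwrap_compressed(frame: bytes) -> bytes:
    """Return the original encoded frames carried by a compressed frame."""
    if frame[:2] != b"1C":
        raise ValueError("not a v1 compressed frame")
    (length,) = struct.unpack_from(">I", frame, 2)
    return zlib.decompress(frame[6:6 + length])
```

Because the compression is carried inside the protocol itself, any peer that understands compressed frames can read the data. Socket-level compression, by contrast, requires both ends to agree on the compressed transport before any frame is exchanged.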

Metrics¶

Refer to the lumberjack input javadoc documentation.