Troubleshooting Slow LTR-LMR Connection

Symptoms

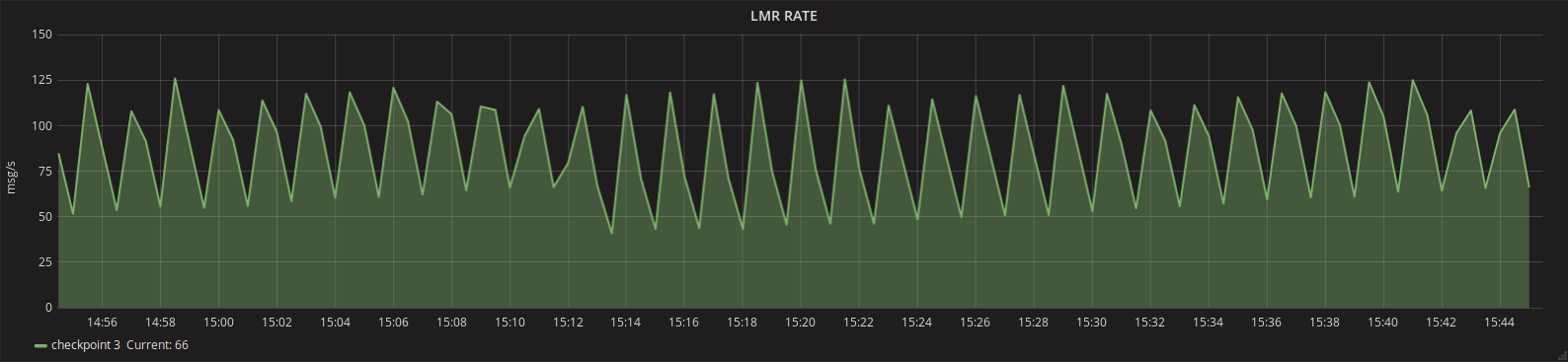

During integration, the flow between the LTR and the LMR is sometimes very slow, i.e. only a few hundred events per second (EPS).

There are two usual causes:

- Punch configuration issue

- Network issue

Punch configuration issue

During log transport, logs are stored in a queue (Kafka) before being sent to the LMR. The component responsible for this reads the queue, forwards the logs, and waits for an acknowledgement. Frequently, the network offers a large bandwidth but has somewhat high latency, so many unacknowledged logs are held in memory directly in the component (topology).

To prevent an out-of-memory error, the topology.max.spout.pending setting limits the number of logs that can be pending acknowledgement at any time.

If topology.max.spout.pending is too low, the component stops reading the queue while it waits for acknowledgements, which causes a significant drop in performance.
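As a rough illustration of why a low limit caps throughput: when at most N logs may be unacknowledged at once and each acknowledgement takes T seconds to come back, the sustained rate cannot exceed N / T (Little's law). The sketch below is a back-of-the-envelope estimate only. The function name and the idea of a single average acknowledgement round-trip time are assumptions made for illustration, not part of the Punch tooling.

```python
def max_sustainable_eps(max_spout_pending: int, ack_round_trip_s: float) -> float:
    """Upper bound on events per second for a given pending limit.

    Hypothetical helper: assumes every event is acknowledged after the same
    average round-trip time, which is a simplification of real traffic.
    """
    if max_spout_pending <= 0:
        raise ValueError("max_spout_pending must be positive")
    if ack_round_trip_s <= 0:
        raise ValueError("ack_round_trip_s must be positive")
    # At most max_spout_pending events are in flight per round trip.
    return max_spout_pending / ack_round_trip_s


# With 600 pending events and acknowledgements taking about 2 seconds,
# the flow is capped at a few hundred EPS regardless of bandwidth.
slow_link_cap = max_sustainable_eps(600, 2.0)

# Raising the limit to 6000 on the same link lifts the cap tenfold.
raised_cap = max_sustainable_eps(6000, 2.0)
```

Under these assumptions, a link with plenty of bandwidth but slow acknowledgements can still be limited to a few hundred EPS purely by the pending limit.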

We recommend setting this value according to the following table:

| EPS (avg) | topology.max.spout.pending |
| --- | --- |
| under 1000 | 600 |
| between 1000 and 5000 | 6000 |
| between 5000 and 10k | 12000 |
| above 10k | 30000 |
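The recommendations above can be expressed as a small lookup, for instance to check a configuration against a measured average rate. This is a minimal sketch: the function name is hypothetical, and because the table does not say which range the boundary values (1000, 5000, 10k) belong to, the code assumes that 1000 and 5000 fall in the higher range and that exactly 10000 stays in the "between 5000 and 10k" range.

```python
def recommended_max_spout_pending(avg_eps: float) -> int:
    """Return the recommended topology.max.spout.pending for an average EPS.

    Hypothetical helper. Boundary handling is an assumption, since the
    source table does not specify which side each boundary belongs to.
    """
    if avg_eps < 0:
        raise ValueError("avg_eps must not be negative")
    if avg_eps < 1000:
        return 600
    if avg_eps < 5000:
        return 6000
    if avg_eps <= 10000:
        return 12000
    return 30000


# Example: a platform averaging 7500 EPS
value_for_7500 = recommended_max_spout_pending(7500)
```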

Network Issues

Between LTR and LMR, the acknowledged lumberjack protocol is used. This protocol is two-way, but only a small amount of information travels upstream. It relies on a keepalive mechanism that helps you detect slow-network issues.

In the worker logs, look for the following warning (check before and after increasing and decreasing the rate):

2017-11-13 16:15:00.283 c.t.s.c.p.c.n.i.NettyLumberJackClientImpl [WARN] message="keepalive timeout reached" now=60046 timeout=60000

This warning indicates a lumberjack timeout during the keepalive test. Check your bandwidth capacity using the punchplatform-injector.sh tool. Also check that the MTU settings of your link are correct.
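To find these warnings quickly in a large worker log, a short script can filter the lines and extract the two numeric fields. The sketch below relies only on the layout of the sample line shown above. The function name is hypothetical, and other versions may format the message differently.

```python
import re
from typing import Optional, Tuple

# Pattern based solely on the sample warning shown in this page.
KEEPALIVE_RE = re.compile(
    r'message="keepalive timeout reached"\s+now=(\d+)\s+timeout=(\d+)'
)


def parse_keepalive_timeout(line: str) -> Optional[Tuple[int, int]]:
    """Return (now, timeout) if the line is a keepalive timeout warning."""
    match = KEEPALIVE_RE.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


sample = (
    "2017-11-13 16:15:00.283 c.t.s.c.p.c.n.i.NettyLumberJackClientImpl [WARN] "
    'message="keepalive timeout reached" now=60046 timeout=60000'
)
parsed = parse_keepalive_timeout(sample)  # (60046, 60000)
```

Counting matching lines before and after a rate change gives a simple way to see whether the timeouts correlate with load.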