Administration¶

In contrast to many large and complex big data platforms, the punch focuses on and enforces simplicity:

- Your complete deployment architecture is described using only two configuration files.

- Every processing application is described using a simple and readable configuration file. It can be fully audited before it is submitted for execution.

- The punch way of designing applications frees end users from having to know the details of data accesses.

- End-point addresses and security parameters are not scattered across these configuration files.

- Instead, a resolver module is in charge of enforcing the secure and controlled resolution of these parameters.
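To make the resolver idea concrete, here is a minimal Python sketch, assuming a hypothetical resolver table that maps the logical names used in application configurations to concrete endpoints and credential references. The names `RESOLVER_RULES`, `resolve`, `es_data` and the URL are illustrative only; they are not the punch resolver's actual file format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    url: str
    credentials_ref: str  # a reference to a secret, never the secret itself


# Hypothetical resolver rules, owned by the platform team, keyed by (tenant, logical name).
RESOLVER_RULES = {
    ("mytenant", "es_data"): Endpoint("https://es-gw.internal:9200", "secret://mytenant/es"),
}


def resolve(tenant: str, app_config: dict) -> dict:
    """Return a copy of app_config where the logical 'target' is replaced by a real endpoint."""
    key = (tenant, app_config["target"])
    if key not in RESOLVER_RULES:
        # Unknown names, or names belonging to another tenant, are rejected.
        raise PermissionError(f"no resolution rule for {key}")
    endpoint = RESOLVER_RULES[key]
    resolved = dict(app_config)
    resolved["target"] = endpoint.url
    resolved["credentials_ref"] = endpoint.credentials_ref
    return resolved


# The application configuration only names a logical target: no address, no secret.
app = {"name": "parser", "target": "es_data"}
print(resolve("mytenant", app))
```

The design point is that the application file stays auditable and portable, while the only place holding addresses and secrets is the resolver, which can refuse cross-tenant resolutions.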

These patterns and features are key to keeping a punch platform simple, hence easy to secure, despite a possibly large number of applications, servers and data access points.

That said, not every user interacts with the same data, commands or components. Let us first review the various types of users, then the actions they typically perform on the system or its applications, and finally the protections put in place for each of them.

User Types and Roles¶

End Users¶

End users only use the data products generated by the various processing applications. Data products are only exposed through secured, multi-tenant access points.

The punch is often used to provide end users with a SaaS offering. Such users mostly use Kibana dashboards. However, some end users can be offered a programmatic API or higher-level services, for example to extract data.

Expert Users¶

Expert users are in charge of enriching the platform with additional applications and processing, to ultimately generate and expose new data products, visualisations and dashboards to themselves and to end users. Examples best illustrate the role of such expert users.

CyberSecurity Expert¶

Consider a cybersecurity SaaS built on top of the punch. The incoming data is poorly structured. Cybersecurity experts work on the platform daily to keep enriching and improving the data transformation processing, visualisations and tooling.

Cleaning and normalising that data is a crucial step to benefit from powerful search capabilities. The punch provides many features for this work, for example a compact programming language to enrich or update the transformation of raw log data into normalised and enriched documents.

Say, for example, the system collects two types of logs, received as raw strings. The first type uses a key-value format. Here is one indicating that malware has been detected:

date=2018-11-02T10:41.00 ip=10.10.10.1 message=malware detected

The second type is received in JSON format from another device. Here a firewall recorded a connection from our infected device, probably to the internet:

{"timestamp":"1541151767","source":"10.10.10.1","deviceType":"firewall","event":"traffic allowed"}

From the raw data, it is easy to perform a (human) correlation between these two events: the system has a security breach. In a big data context, however, it is impossible to navigate so much data manually. Instead, expert users query the data to select events and discard noise. If only raw data is recorded, it is still extremely complex to write an efficient query. If instead the two previous events were stored as follows:

{
  "alarm": {"name": "malware detected"},
  "init": {"host": {"ip": "10.10.10.1"}},
  "obs": {"ts": "2018-11-02T10:41:00.000Z"},
  "type": "nids"
}

{
  "alarm": {"name": "traffic allowed"},
  "init": {"host": {"ip": "10.10.10.1"}},
  "obs": {"ts": "2018-11-02T10:41:01.000Z"},
  "type": "fw"
}
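In the punch, such a transformation would be written as a punchlet. The Python sketch below only illustrates the logic of mapping both raw formats onto the normalised fields; it is not punchlet code. It assumes that key-value logs come from a NIDS sensor (hence `"type": "nids"`), and the `DEVICE_TYPES` mapping is hypothetical. Timestamp normalisation (the `obs.ts` field) is left out to keep the sketch short.

```python
import json
import re

# Matches "key=value" pairs where a value may contain spaces:
# each value runs until the next " key=" token or the end of the line.
KV_PAIR = re.compile(r"(\w+)=(.*?)(?=\s+\w+=|$)")


def parse_kv(line: str) -> dict:
    return {key: value for key, value in KV_PAIR.findall(line)}


def normalize_nids(line: str) -> dict:
    fields = parse_kv(line)
    return {
        "alarm": {"name": fields["message"]},
        "init": {"host": {"ip": fields["ip"]}},
        "type": "nids",  # assumption: key-value logs come from a NIDS sensor
    }


# Hypothetical mapping from raw deviceType values to the short normalised type.
DEVICE_TYPES = {"firewall": "fw"}


def normalize_fw(line: str) -> dict:
    event = json.loads(line)
    return {
        "alarm": {"name": event["event"]},
        "init": {"host": {"ip": event["source"]}},
        "type": DEVICE_TYPES.get(event["deviceType"], event["deviceType"]),
    }


raw_kv = "date=2018-11-02T10:41.00 ip=10.10.10.1 message=malware detected"
raw_json = '{"timestamp":"1541151767","source":"10.10.10.1","deviceType":"firewall","event":"traffic allowed"}'
for doc in (normalize_nids(raw_kv), normalize_fw(raw_json)):
    print(json.dumps(doc))
```

Both sources end up sharing the same field names (`init.host.ip`, `alarm.name`), which is what makes a single query across them possible.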

It would then be simple and efficient to query the data using SQL-like capabilities, to quickly find first the malware infection, then the virus propagation. This short example highlights the role of the domain (here cybersecurity) expert, who has to continuously adapt and improve the various configurations and parsers to improve data navigability.
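The correlation such a query performs can be sketched in plain Python over in-memory documents mirroring the two normalised events above, with timestamps written in ISO 8601 UTC. On a real platform this would be a query against the data tier (for example Elasticsearch); the function name below is hypothetical.

```python
# Normalised events mirroring the two documents above.
events = [
    {"alarm": {"name": "malware detected"}, "init": {"host": {"ip": "10.10.10.1"}},
     "obs": {"ts": "2018-11-02T10:41:00.000Z"}, "type": "nids"},
    {"alarm": {"name": "traffic allowed"}, "init": {"host": {"ip": "10.10.10.1"}},
     "obs": {"ts": "2018-11-02T10:41:01.000Z"}, "type": "fw"},
]


def infected_hosts_with_outbound_traffic(events):
    """Return IPs that raised a malware alarm and later had firewall traffic allowed."""
    first_infection = {}
    for e in events:
        if e["type"] == "nids" and e["alarm"]["name"] == "malware detected":
            ip, ts = e["init"]["host"]["ip"], e["obs"]["ts"]
            # Keep the earliest infection time per host. ISO 8601 strings with the
            # same fixed format and time zone sort chronologically as plain strings.
            if ip not in first_infection or ts < first_infection[ip]:
                first_infection[ip] = ts
    suspects = set()
    for e in events:
        ip = e["init"]["host"]["ip"]
        if (e["type"] == "fw" and e["alarm"]["name"] == "traffic allowed"
                and ip in first_infection and e["obs"]["ts"] > first_infection[ip]):
            suspects.add(ip)
    return sorted(suspects)


print(infected_hosts_with_outbound_traffic(events))
```

The query only works because both events share `init.host.ip` and a comparable `obs.ts`; on raw strings the same logic would need ad hoc parsing for every source.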

Such users have more advanced access to the platform. They are not administrators: they cannot, for example, stop an Elasticsearch cluster. They may, however, be allowed to update a parser and reload the appropriate parsing application.

Data Scientists¶

Just like cybersecurity experts work on parsers, visualisations and dashboards, data scientists need access to the data to perform predictions, compute AI models and deploy additional applications that provide more insights to end users.

AI models are yet another resource, computed and then saved in the punch. Once computed, a model can be loaded into a processing pipeline to, say, detect anomalies in real-time data.
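The compute, save, then load-and-apply cycle can be illustrated with a deliberately trivial model. This Python sketch assumes a z-score detector on per-minute event counts, serialised as JSON; it is not the punch's machine learning API, and the training values are made up for illustration.

```python
import json
import statistics


def train(history: list[float]) -> str:
    """Fit a trivial model (mean and standard deviation) and serialise it as a resource."""
    model = {"mean": statistics.fmean(history), "stdev": statistics.pstdev(history)}
    return json.dumps(model)


def load_detector(resource: str, threshold: float = 3.0):
    """Load a saved model and return a function that flags anomalous values."""
    model = json.loads(resource)

    def is_anomaly(value: float) -> bool:
        if model["stdev"] == 0:
            return value != model["mean"]
        return abs(value - model["mean"]) / model["stdev"] > threshold

    return is_anomaly


# Offline: compute the model from historical per-minute event counts (made-up values).
resource = train([100, 102, 98, 101, 99, 100])
# Online: the pipeline loads the resource once, then scores each incoming value.
detect = load_detector(resource)
print([detect(v) for v in (101, 250)])
```

Separating the saved resource from the scoring function mirrors the idea that a model is computed once, stored, and then reused by a real-time pipeline.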

This (short) description suffices to categorise data scientists as expert users, in that they require safe and simplified access to the data and to additional tools.

Administrators¶

Administrators are responsible for:

- maintaining the application

- keeping the capacity planning up to date

- performing patching and non-critical migrations

- tracking issues and raising tickets with the punch help desk

User Actions¶

The following lists present the different actions that can be executed on the punch platform. Identifying these actions, along with the role of the corresponding users, is a key part of the security assessment. Each project building a solution on top of the punch is assisted and encouraged to refine this list as part of its own internal project documentation.

End User actions¶

- connect to the Kibana UI from an external network

- search and query data through Kibana dashboards

- design new visualisations and dashboards, and save them for later retrieval

- interact with punch UI plugins to:

  - perform data extraction

  - test a parser, a grok or a dissect pattern

  - save/load resources through the punch resource manager UI

- programmatically interact with the punch REST gateway to submit Elasticsearch queries and retrieve the results

Expert Users Actions¶

In addition to end user actions:

- connect to advanced, possibly multi-tenant, Kibana instances from internal networks

- access the punch operator environment through SSH from the internal network

  - this access may or may not be granted

- start or stop a restricted set of applications

- design and run batch processing punchlines (aggregations, machine learning)

- update a processing function (punchlet)

- update the configuration for a given tenant and/or channel

Administrator Actions¶

In addition to expert user actions, an administrator can:

- connect to the operator terminal environment using SSH, from the internal network only

- perform administrative actions as documented in the punch administrator guides

- connect to all devices with a sudoer account from the internal network

Important

It is possible (and advised) to prevent administrators from changing application configurations. It is also possible (and again advised) to prevent administrators from accessing tenant data. These require more advanced setups at deployment time and are out of the scope of this guide.
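The cumulative structure of these roles ("in addition to ...") plus the advised restrictions on administrators can be modelled as inheritance with an explicit deny set. This is a minimal Python sketch; the role and action names are hypothetical labels for the lists above, not punch identifiers.

```python
# Hypothetical role model: each role inherits its parent's actions ("in addition to ...").
ROLES = {
    "end_user": {"parent": None,
                 "actions": {"kibana.search", "kibana.save_dashboard",
                             "ui.extract_data", "gateway.query"}},
    "expert": {"parent": "end_user",
               "actions": {"app.start_stop_restricted", "punchlet.update",
                           "config.update_tenant"}},
    "admin": {"parent": "expert",
              "actions": {"ssh.operator", "ops.admin", "ssh.sudo"}},
}


def allowed_actions(role: str, denied: frozenset = frozenset()) -> set:
    """Collect a role's actions plus all inherited ones, minus explicitly denied actions."""
    actions = set()
    current = role
    while current is not None:
        actions |= ROLES[current]["actions"]
        current = ROLES[current]["parent"]
    return actions - denied


# Advised hardening: administrators neither change application configuration
# nor read tenant data.
ADMIN_DENIED = frozenset({"punchlet.update", "config.update_tenant",
                          "kibana.search", "ui.extract_data", "gateway.query"})
admin = allowed_actions("admin", ADMIN_DENIED)
print("ops.admin" in admin, "config.update_tenant" in admin)
```

Keeping denials explicit, rather than silently removing actions from the role tree, makes the hardening easy to audit.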

Users Access Control¶

End Users¶

The punch provides two access points to expose data to end users. The first is a secured web UI, based on Kibana, that provides:

- an intuitive UI to discover, view and search the consolidated data

- dedicated UIs to perform data extractions

- various other UIs to access resources such as parsers, machine learning models and applications

In addition, the punch provides a REST gateway server that can play two distinct roles:

- It can act as a proxy to further protect the data accesses from the UI.

- It exposes data access APIs with both security protection and data protection:

  - security here means restricting which data can be accessed by which users

  - data protection means preventing users from performing dangerous requests that could jeopardize the performance of the data tier
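The two kinds of protection can be sketched as a single request check. This Python sketch is illustrative only: the `Request` fields, the user-to-tenant table and the specific data protection rules (maximum result size, mandatory time range, no leading wildcard) are assumptions chosen as typical examples, not the punch REST gateway's actual rules.

```python
from dataclasses import dataclass


@dataclass
class Request:
    user: str
    tenant: str           # tenant whose data is targeted
    size: int             # number of documents requested
    has_time_range: bool  # whether the query is bounded in time
    query: str


# Hypothetical authorisations and protection limits.
USER_TENANTS = {"alice": {"mytenant"}}
MAX_SIZE = 10_000


def check(req: Request) -> None:
    """Raise if the request violates security or data protection rules."""
    # Security: restrict which data can be accessed by which users.
    if req.tenant not in USER_TENANTS.get(req.user, set()):
        raise PermissionError(f"{req.user} may not access tenant {req.tenant}")
    # Data protection: reject requests likely to overload the data tier.
    if req.size > MAX_SIZE:
        raise ValueError("result size too large")
    if not req.has_time_range:
        raise ValueError("a time range is required")
    if req.query.startswith("*"):
        raise ValueError("leading wildcards are not allowed")


check(Request(user="alice", tenant="mytenant", size=100, has_time_range=True, query="malware*"))
print("accepted")
```

Running both checks in the gateway, rather than in each UI, means every access path (UI or direct API call) gets the same protection.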

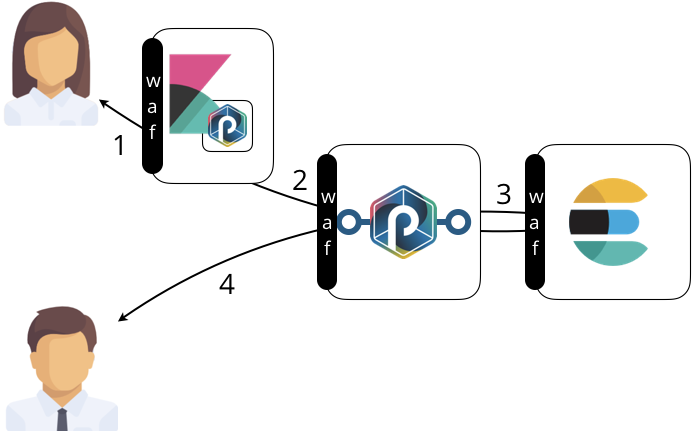

Here is an illustration of the resulting architecture, with a focus on the Elasticsearch database. Other data sinks are protected similarly.

Note

The black WAF (Web Application Firewall) symbols highlight the protections deployed to protect the data. These are explained in detail in the data protection guides.

- The user connects to and interacts with the UI. This is a Kibana server equipped with the additional punch plugins.

- On behalf of the user, the UI submits a data access request to the punch REST gateway. Security credentials are part of that interaction so as to ensure access control.

- The REST gateway forwards the request to the appropriate backend. In this example, it queries the Elasticsearch database.

- Another user performs a similar request by directly targeting the REST gateway. This is another possible data access path.

In both cases, data accesses are protected.

Expert Users¶

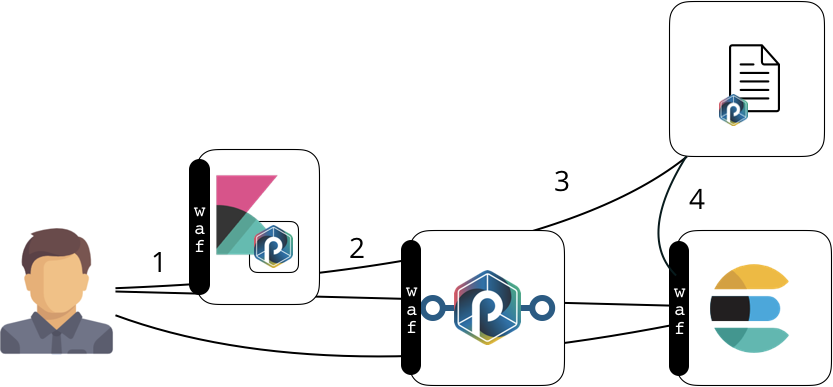

Here is an overview of what an expert user might be allowed to do.

Just like an end user, an expert user can go through the UI and the REST gateway. In addition, they are granted an additional UI service to request the update of a parser and/or the reloading of an application. These are flows (1), (2) and (3) illustrated in the schema.

Important

Note that these interactions also go through the REST gateway. In addition to enforcing a strong security architecture and access control, this scheme frees edge components such as the Kibana UI from needing direct access to the processing or data layers.

Notice the new interaction (4). It represents the read/write data access performed by an application. This application is typically a punchline performing parsing, aggregation, machine learning or any other useful action. It also goes through the WAF protection before being authorized to access Elasticsearch data.

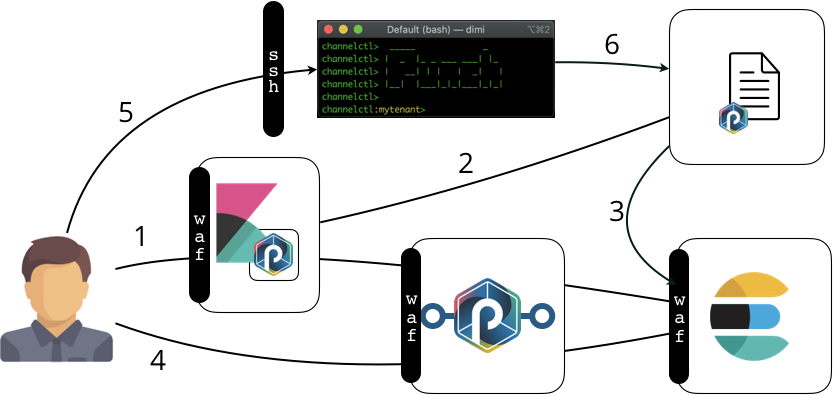

Administrators Access¶

Administrators are responsible for:

- monitoring the system and keeping it up

- keeping the capacity planning up to date

- performing patching and non-critical migrations

- raising tickets with the punch help desk

Here is the access they may be granted:

As you may guess, administrators can be granted controlled SSH terminal access to run command-line tools. These commands provide the same capabilities as those exposed through the UI, plus more advanced operational commands to investigate, restore, or access the data and services.

Important

Whatever the user and their access point, all actions are traced.
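A minimal way to picture "all actions are traced" is a wrapper that records every action, its user, its access point and its outcome, whether it succeeds or fails. This Python sketch uses a hypothetical in-memory `AUDIT_LOG` and a hypothetical `reload_application` action; a real platform would ship such entries to protected, centralised storage.

```python
import functools
import time

AUDIT_LOG: list[dict] = []


def traced(access_point: str):
    """Decorator recording who did what, from where, and whether it succeeded."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(user: str, *args, **kwargs):
            entry = {"ts": time.time(), "user": user, "access_point": access_point,
                     "action": func.__name__, "args": repr(args)}
            try:
                result = func(user, *args, **kwargs)
                entry["status"] = "ok"
                return result
            except Exception as exc:
                entry["status"] = f"failed: {exc}"
                raise
            finally:
                # The entry is appended whether the action succeeded or failed.
                AUDIT_LOG.append(entry)
        return wrapper
    return decorator


@traced("rest-gateway")
def reload_application(user: str, name: str) -> str:
    return f"{name} reloaded"


reload_application("bob", "parsing-app")
print(AUDIT_LOG[-1]["user"], AUDIT_LOG[-1]["action"], AUDIT_LOG[-1]["status"])
```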

Features¶

Multi tenant by design¶

The punch is designed from the ground up to be multi-tenant:

- the centralized configuration stores all configurations in isolated, per-tenant folder trees.

- all punch modules enforce a strong logical per-tenant separation of:

  - Kafka topics

  - Elasticsearch indexes

  - processing punchlines (Spark, Storm or punch)

  - archiving buckets/pools
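Logical separation usually rests on a strict naming convention. The following Python sketch assumes a hypothetical convention where every tenant-owned resource (Kafka topic, Elasticsearch index, archive pool) is prefixed with `<tenant>-`; the punch's actual naming rules may differ.

```python
import re

# Hypothetical rule: tenant names are lowercase alphanumerics without "-",
# so the "<tenant>-" prefix can never be ambiguous (and Elasticsearch index
# names must be lowercase anyway).
TENANT_NAME = re.compile(r"^[a-z0-9]+$")


def scoped(tenant: str, name: str) -> str:
    """Build a tenant-scoped resource name, e.g. for a Kafka topic or Elasticsearch index."""
    if not TENANT_NAME.match(tenant):
        raise ValueError(f"invalid tenant name: {tenant!r}")
    return f"{tenant}-{name}"


def owns(tenant: str, resource: str) -> bool:
    """True if the resource name belongs to the tenant's namespace."""
    return resource.startswith(f"{tenant}-")


topic = scoped("mytenant", "input")
index = scoped("mytenant", "events-2018.11.02")
print(topic, index, owns("othertenant", index))
```

Including the separator in the prefix check matters: tenant `acme` must not be considered the owner of `acme2-input`.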

All data accesses are similarly protected:

- The punch REST gateway exposes secured per-tenant APIs.

- Kibana instances are deployed per tenant.

- Application firewalls (WAFs) isolate each tenant's Kibana access to Elasticsearch from the other tenants. More details are given in the Data protection section.

Patching Procedures¶

The security level of a platform depends on its ability to patch vulnerabilities quickly. The punch provides an efficient way to manage vulnerabilities by patching the platform. Refer to the following procedures: